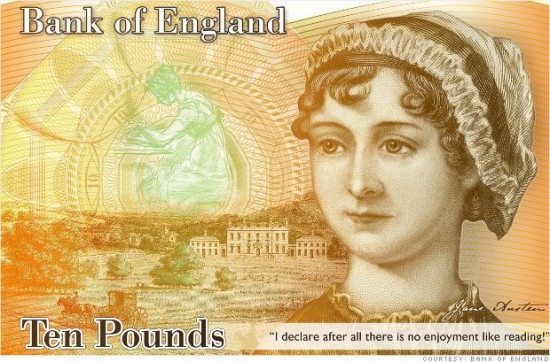

What do you do when someone successfully campaigns to have a woman featured on the next edition of the ten pound note? Well, if you’re a semi-moron with all the social skills of a decapitated earthworm, you threaten to rape them. Makes sense? No, not to me either. But then, I do have an IQ high enough to stand out from plankton.

I don’t think anyone with sufficient grasp of the English language to be capable of actually reading this article would argue that Caroline Criado-Perez “deserved” to be abused, or even that the abuse she received was anything other than completely unacceptable. The debate is not about the nature of the abuse, but about the most appropriate response to it.

Now, I don’t accept the argument that Ms Criado-Perez should simply have ignored the tweets and/or blocked their senders. Up to a point, certainly, it is necessary to put up with the rough and tumble of public debate if you want to engage with social media, and the occasional abusive, moronic or foul-mouthed response is part of that. But a sustained and seemingly coordinated campaign of abuse goes well past that point. This wasn’t just a small handful of idiots shouting off in public; it was a deliberate attempt to foment abuse in order to intimidate. And that is beyond anything that any user of Twitter should have to put up with.

To that extent, therefore, I am in the “something must be done” camp. But the question is, what should be done?

There are two things that I think definitely should not be done. One is for Twitter itself to take on the responsibility of monitoring every tweet for abusive content. While seemingly attractive, the logistics of that make it completely impractical. To put things into perspective, the Guardian’s “Comment is Free” section attracts around 600,000 comments a month. To monitor that, the Guardian employs twelve moderators. Even then, they don’t spot everything – in many cases, it takes an alert from a user before something objectionable is removed. But that’s a drop in the ocean compared to social media. Twitter generates around 60,000,000 tweets a day. To monitor that, Twitter would need to employ nearly 37,000 moderators. Which is about a hundred times as many people as currently work for Twitter. Even Google doesn’t have anywhere near that number of employees. The idea that it would be practical, and cost-effective (remembering that Twitter has no income stream other than advertising), to recruit, train and deploy so many staff is utterly ludicrous.
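The arithmetic behind that figure can be checked with a quick sketch. It assumes, purely for illustration, that a Twitter moderator would get through the same number of items per month as a Guardian moderator and that a month is 365/12 days long; the function name is made up for this example.

```python
# Back-of-the-envelope check of the moderator estimate.
# Assumption: each moderator handles the same monthly workload as one of
# the Guardian's twelve, and a month averages 365/12 days.

GUARDIAN_COMMENTS_PER_MONTH = 600_000
GUARDIAN_MODERATORS = 12
TWEETS_PER_DAY = 60_000_000
DAYS_PER_MONTH = 365 / 12


def moderators_needed(items_per_day: float) -> float:
    """Scale the Guardian's staffing ratio up to a daily volume of items."""
    items_per_moderator = GUARDIAN_COMMENTS_PER_MONTH / GUARDIAN_MODERATORS
    items_per_month = items_per_day * DAYS_PER_MONTH
    return items_per_month / items_per_moderator


if __name__ == "__main__":
    print(f"Moderators needed: {moderators_needed(TWEETS_PER_DAY):,.0f}")
```

On those assumptions each moderator covers 50,000 items a month, and the result lands in the region of the figure quoted above.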

OK, so why not do it automatically? Why not use software to filter out abuse?

This is what prompted this article. Yesterday, I tweeted that

The idea that Twitter could actively moderate all tweets is ludicrous, So is the idea that tweets are somehow immune to the law.

I was specifically addressing the human moderation question there, but someone responded to me with

Email systems actively moderate emails, removing spam while letting good messages through. Far from impossible

You can see the rest of that conversation here, but it quickly reached the point at which 140 characters isn’t enough to explain the fairly complex issues involved. Hence this article.

OK, so why not use software to do it? Well, there are a number of reasons why an analogy with email spam is inappropriate. Here are just some of them.

For a start, email comes with a huge amount of metadata (the headers) which gives away a lot of detail about the source of the message. Contrary to popular belief, most email spam isn’t blocked because of an analysis of the content; it’s blocked because it’s sent by known spammers or because it’s otherwise not a valid message. Looking at the logs of my own mail server, the top four reasons for blocking or filtering spam are, in order:

- Sent to invalid recipients.

- The sending server doesn’t have a valid hostname.

- The sending server is in the Spamhaus “Zen” black hole list.

- The content filter I use has determined it to be spam.

Of these, the top three have no equivalent in social media. Yet they are the only ones which are sufficiently reliable to allow messages rejected by them to be completely dropped. The last, content-based filtering, requires a regular review of my spam folder just in case something has been wrongly filtered. (And, by the way, if you think that your spam filters are perfect because you never see any spam, then that’s only because you never see the emails that have been blocked but shouldn’t have been. No content-based filter is ever 100% perfect.)
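To make the distinction concrete, here is a minimal sketch of that kind of layered filtering. It is not how any particular mail server is configured: the `Message` fields, the `classify` function, the order of the checks and the toy content test are all assumptions for illustration. The point is that the three metadata checks reject outright, while the content check only diverts to a folder for later review.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Set


class Verdict(Enum):
    REJECT = "reject"            # dropped outright, never seen by the user
    SPAM_FOLDER = "spam-folder"  # kept, because the content filter may be wrong
    DELIVER = "deliver"


@dataclass
class Message:
    recipient: str
    sender_ip: str
    sender_hostname: Optional[str]  # None if the server has no valid hostname
    body: str


def classify(
    msg: Message,
    valid_recipients: Set[str],
    blocklisted_ips: Set[str],
    looks_like_spam: Callable[[str], bool],
) -> Verdict:
    """Apply the cheap, reliable metadata checks first, content last."""
    if msg.recipient not in valid_recipients:
        return Verdict.REJECT
    if not msg.sender_hostname:
        return Verdict.REJECT
    if msg.sender_ip in blocklisted_ips:
        return Verdict.REJECT
    if looks_like_spam(msg.body):
        return Verdict.SPAM_FOLDER
    return Verdict.DELIVER


if __name__ == "__main__":
    msg = Message("me@example.org", "192.0.2.10", "mail.example.net",
                  "Cheap pills, buy now")
    verdict = classify(
        msg,
        valid_recipients={"me@example.org"},
        blocklisted_ips={"203.0.113.5"},
        looks_like_spam=lambda body: "buy now" in body.lower(),
    )
    print(verdict)
```

A tweet offers nothing to feed the first three checks, so a Twitter equivalent would collapse to the last branch alone.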

If we’re going to use software to filter Twitter, then it has to be entirely content-based. But Twitter still lacks a lot of the features which make filtering email spam (relatively) easy. One of those is that email spam is, by definition, unsolicited. But there’s no equivalent concept on Twitter. If you have a public profile, then anyone you haven’t blocked can @tweet you. If we’re going to use solicitation as a factor, then we’re just back to the position I’ve already rejected at the start: blocking offenders. Equally, the other thing that the vast majority of email spam has in common is that it’s either trying to sell you something or scam you into doing something. Scams and adverts are not unknown on Twitter, but, unlike email, they’re not a huge problem.

The reality is that the problem on Twitter isn’t spam, it’s abuse. So an analogy with spam filtering, in any medium, is always going to be flawed. But suppose we tried content-based filtering anyway? How effective is it going to be?

The answer, I think, is “not very”. Or possibly even “barely”. Taking the rather distasteful topic which prompted the debate, it’s going to be very difficult indeed (as in, pretty much impossible) to come up with an algorithm which will filter out the abuse levelled at Caroline Criado-Perez, but won’t filter out references to this poem or this organisation or even this agricultural crop. And if we cut out swear words as well, then residents of Scunthorpe may have a problem. And so, definitely, will this little village in Austria.
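A few lines of code show why. The sketch below is deliberately naive and is not a proposal: the one-word blocklist, the function names and the sample strings are all made up for illustration. Substring matching flags perfectly innocent words, and tightening it to whole-word matching still cannot tell a threat from a mention of a crop or a poem.

```python
import re

# Hypothetical one-word blocklist, chosen to match the topic of the debate.
BLOCKED_TERMS = {"rape"}


def substring_filter(text: str, blocked=BLOCKED_TERMS) -> bool:
    """Flag the text if any blocked term appears anywhere inside it."""
    lowered = text.lower()
    return any(term in lowered for term in blocked)


def whole_word_filter(text: str, blocked=BLOCKED_TERMS) -> bool:
    """Flag the text only if a blocked term appears as a complete word."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in blocked for word in words)


if __name__ == "__main__":
    samples = [
        "Picking grapes in the sunshine",
        "Fields of oilseed rape are in flower this week",
        "Re-reading Pope's The Rape of the Lock",
    ]
    for text in samples:
        print(substring_filter(text), whole_word_filter(text), text)
```

The first sample trips only the substring filter; the other two trip both, even though none of the three is abusive. Telling them apart from genuine abuse needs an understanding of context, which is exactly what a word list doesn't have.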

So, there isn’t anything that Twitter can usefully do to pre-emptively address abuse. But what can be done?

Firstly, of course, one thing that can definitely be done is to report it to the police. Contrary to what seems to be popular opinion, Twitter isn’t beyond the reach of the law. There have already been cases of people being successfully prosecuted for offensive tweets, and the number is rising. A lot of the criticisms of both Twitter and the police in this particular case have been misplaced; we don’t live in a dictatorship where the police can simply snatch someone off the streets on the basis of an unproven allegation. Things have to be investigated and action taken in accordance with the law. I am certain that there will be convictions over the abuse of Ms Criado-Perez, but many of the more hot-headed commentators will already have moved on to their next issue of the day and will fail to report it, thus adding to the misconception that nothing has been (or will be) done.

The other thing that can be done is for Twitter to make it easier to block and report abusers. Compared to Facebook, where that can be done in just a couple of clicks directly from the offending message, Twitter does make the process unnecessarily convoluted. In fact, I think this alone would have the single biggest impact, because it would make it much harder for the trolls to keep up a sustained campaign of abuse. It could even be done by means of drag and drop: Twitter’s normal interface is essentially JavaScript-based so it would be relatively simple to add the ability to multi-select messages and drag them all into a “sin bin”.

Other potentially useful options would be to have some kind of probation period for new accounts. A lot of trolls use throwaway accounts set up specifically for the purpose of abusing their victims. Maybe having to wait a set period of time before being able to direct a message to a particular person would be a way of keeping this in check. And, although automatically filtering individual messages is close to impossible, coming up with some kind of “trollishness” score is far easier. Maybe accounts which reach a certain level could be restricted in some way – again, losing the ability to direct tweets to other users would be a helpful start. This would obviously need to be subject to appeal, in case of false positives (of which there will inevitably be some), but focussing on the users is likely to be far more productive than looking at content alone.
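As a rough idea of what an account-level check might look like, here is a minimal sketch combining a probation period with a “trollishness” score. Everything in it is hypothetical: the `Account` fields, the weights, the seven-day probation and the threshold are invented for illustration and say nothing about what data Twitter actually holds or how it would weigh it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

PROBATION = timedelta(days=7)  # hypothetical probation period
SCORE_LIMIT = 5.0              # hypothetical restriction threshold


@dataclass
class Account:
    created_at: datetime
    distinct_reporters: int     # different users who have reported the account
    blocked_by: int             # users who have blocked the account
    mentions_sent: int          # tweets directed at a named user
    mentions_to_strangers: int  # of those, sent to users who don't follow it


def trollishness(acct: Account) -> float:
    """Combine account-level signals into one score (weights are arbitrary)."""
    if acct.mentions_sent:
        stranger_ratio = acct.mentions_to_strangers / acct.mentions_sent
    else:
        stranger_ratio = 0.0
    return (1.0 * acct.distinct_reporters
            + 0.5 * acct.blocked_by
            + 2.0 * stranger_ratio)


def may_direct_tweets(acct: Account, now: datetime) -> bool:
    """New accounts wait out probation; older ones must stay under the limit."""
    if now - acct.created_at < PROBATION:
        return False
    return trollishness(acct) < SCORE_LIMIT


if __name__ == "__main__":
    now = datetime(2013, 8, 1)
    throwaway = Account(now - timedelta(hours=2), 0, 0, 0, 0)
    regular = Account(now - timedelta(days=400), 1, 2, 50, 5)
    print(may_direct_tweets(throwaway, now), may_direct_tweets(regular, now))
```

Counting distinct reporters rather than raw reports means a single accusation, however often repeated, isn't enough on its own to restrict an account, and any restriction would still need the appeal route mentioned above.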

One of the things which has to be considered in any assessment, either manual or automated, of users, though, is that malicious accusations of abuse are themselves abuse. That’s why the calls for a “zero tolerance” policy are misguided; if person A’s account can be suspended just because person B accuses A of abusing them then that makes it all too easy for the trolls to turn round and start falsely claiming to be the victims in order to force their opponents off Twitter.

I’d also add, in passing, that neither Twitter nor the authorities can really win here. Drawing the line between tasteless jokes and actual abuse is difficult, and everyone has their own opinion of where it should be drawn. Freedom of speech must, of necessity, include the freedom to be offensive, otherwise it isn’t actually free in any meaningful sense. But, on the other hand, freedom to speak is not the same as freedom to harm, and abusive language can be genuinely harmful. Freedom of speech extends to the expression of opinions that I fundamentally disagree with and even find repulsive. But it does not extend to a right to tell deliberate lies or deliberately mislead and deceive.

Social media is still relatively new. It isn’t surprising that, every now and then, something comes along which illustrates one of its weaknesses. It’s a healthy debate to be having. But we can only solve these problems if we start from an understanding of the nature of the medium.